Role: Prototyper/Mobile Application Developer

Tools and Libraries: Android Studio, Google Play Services (for face detection)

GUI Programming Concepts: Input devices, input events, finite state machine, model-view-presenter for Android

This was the final requirement for Software Structures for User Interfaces, a core subject I took while completing my Master’s degree in Human-Computer Interaction at Carnegie Mellon University. Parts of the code from the googly-eyes sample were used in this project.

Introduction

An alarm clock that wakes you up by using face detection to force you to keep your eyes open. Sounds crazy? What if I told you that it also takes snapshots of you while you struggle to wake up, keeping them hostage and potentially posting them to social media (with the hashtag #wokeuplikethis, of course) if you hit snooze or fail to wake up? Let’s see if that doesn’t make you want to wake up.

Problem Space

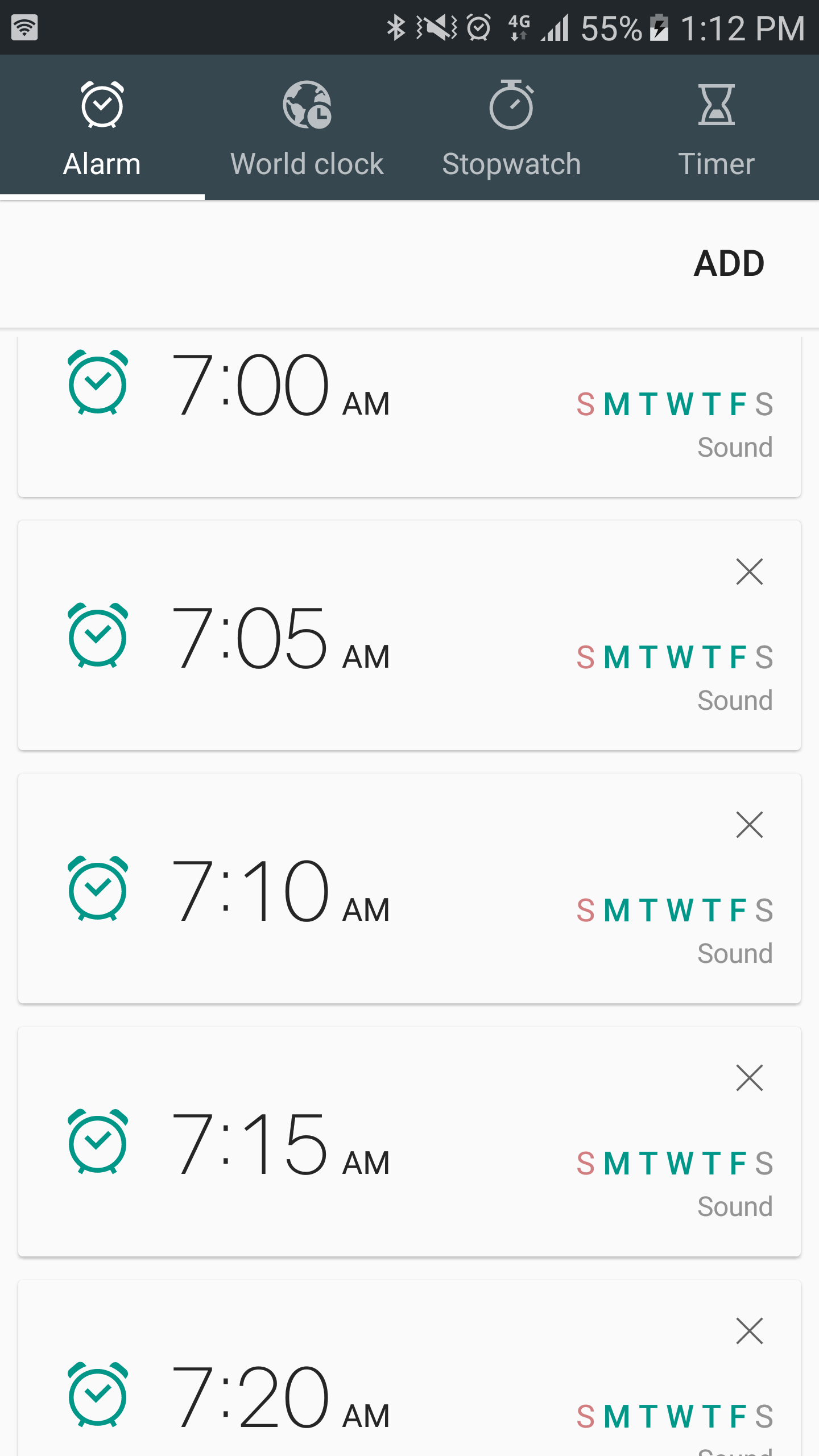

I'm the type of person who struggles with waking up in the morning. I tend to silence my alarm clock in a matter of seconds and then fall back to sleep immediately. If I wanted to actually get out of bed, I needed to find a way to keep myself awake longer. And that's when it hit me.

What's a sure way of keeping me awake?

Opening my eyes.

What's a good way of keeping my eyes open?

Requiring me to open my eyes in order to turn off the alarm clock.

How can I do that?

Face detection.

Implementation

The point of the project was for us to apply the concepts that we learned from our lectures. I applied 3 main lecture concepts: Interaction Techniques, Input Events and Finite State Machines.

Interaction Techniques

Interaction techniques are software-level tricks that GUI designers use to put a new spin on an existing input device. An example is how the Swype keyboard enhances touchscreen text input with its gesture typing: it uses the same touchscreen, but typing on it can be significantly faster.

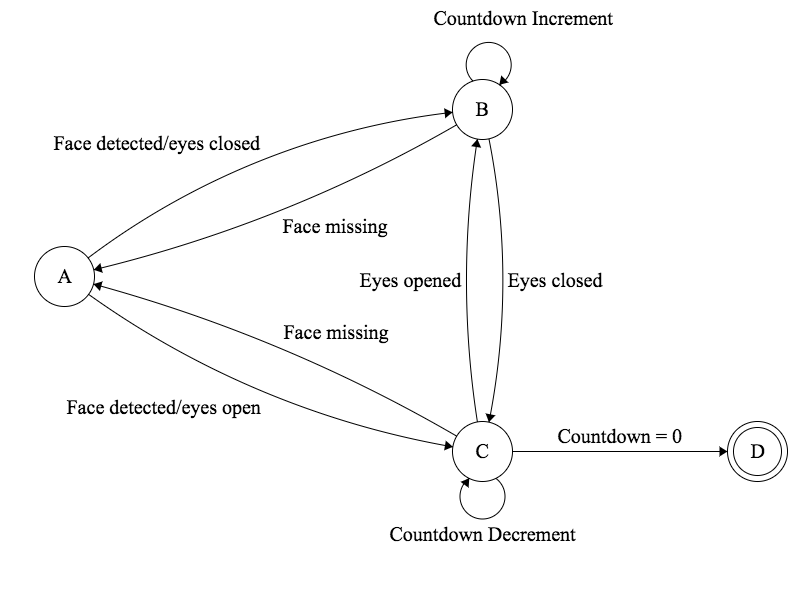

In my case, I used face detection as my interaction technique and the front-facing camera as my input device. By tracking the user's eyes, I can determine whether they are falling back to sleep and react accordingly. If their eyes are open, they're awake, so I lower the volume and start the countdown timer. If their eyes are closed or their face is missing, I reset both the volume and the timer to 100%.
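The reaction described above can be sketched in plain Java. This is only a minimal illustration, not the app's actual code: the class name `WakeUpTracker`, the tick-based countdown, and the fixed volume step are all assumptions made for the example.

```java
/** Hypothetical sketch of the alarm's reaction to eye tracking (not the app's real code). */
final class WakeUpTracker {
    private final int countdownTicks; // assumed: ticks of open eyes needed to dismiss the alarm
    private final float volumeStep;   // assumed: how much the volume drops per tick
    private int remainingTicks;
    private float volume = 1.0f;      // 1.0 means 100%

    WakeUpTracker(int countdownTicks, float volumeStep) {
        this.countdownTicks = countdownTicks;
        this.volumeStep = volumeStep;
        this.remainingTicks = countdownTicks;
    }

    /** Call once per timer tick. Returns true once the countdown has run out. */
    boolean onTick(boolean faceVisible, boolean eyesOpen) {
        if (faceVisible && eyesOpen) {
            // Awake: lower the volume and advance the countdown.
            volume = Math.max(0f, volume - volumeStep);
            remainingTicks = Math.max(0, remainingTicks - 1);
        } else {
            // Eyes closed or face missing: back to full volume and a full countdown.
            volume = 1.0f;
            remainingTicks = countdownTicks;
        }
        return remainingTicks == 0;
    }

    float volume() { return volume; }

    int remainingTicks() { return remainingTicks; }
}
```

With `new WakeUpTracker(3, 0.25f)`, three consecutive ticks with open eyes dismiss the alarm, while a single tick with closed eyes or a missing face sends both values back to the start.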

Input Events

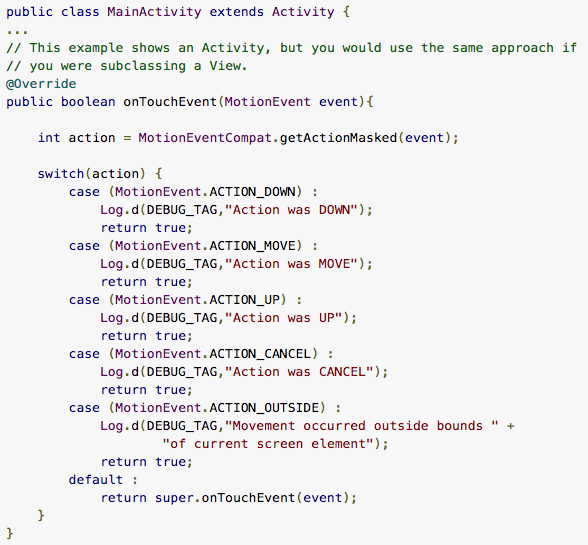

Input events are an abstraction that toolkits provide to make life easier for application developers. For button-type events like mouse clicks, it’s fairly easy to determine whether the user made a right click or a left click. There are, however, more complicated input devices that generate noisy data, hard-to-analyze data, or both. Touchscreen gestures are a great example: what distinguishes a tap and drag from a fling (or flick)? Velocity. A tap and drag has low velocity, while a fling has high velocity. To tell them apart, you’d have to take the x and y coordinates together with their deltas (change over time).
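To make the velocity idea concrete, here is a small Java sketch that classifies a gesture from two touch samples. It is an illustration only: the class `GestureClassifier`, the pixels-per-millisecond unit, and the threshold value are assumptions, and a real toolkit (Android's `GestureDetector`, for example) handles this for you with more care.

```java
/** Hypothetical sketch: telling a drag from a fling using velocity alone. */
final class GestureClassifier {
    enum Gesture { DRAG, FLING }

    private final double flingThresholdPxPerMs; // assumed cut-off, tuned by experiment

    GestureClassifier(double flingThresholdPxPerMs) {
        this.flingThresholdPxPerMs = flingThresholdPxPerMs;
    }

    /** Speed in pixels per millisecond between two touch samples. */
    static double velocity(double x0, double y0, long t0Ms,
                           double x1, double y1, long t1Ms) {
        long dt = t1Ms - t0Ms;
        if (dt <= 0) {
            throw new IllegalArgumentException("samples must be in increasing time order");
        }
        // Distance travelled (from the x and y deltas) divided by elapsed time.
        return Math.hypot(x1 - x0, y1 - y0) / dt;
    }

    Gesture classify(double x0, double y0, long t0Ms,
                     double x1, double y1, long t1Ms) {
        double v = velocity(x0, y0, t0Ms, x1, y1, t1Ms);
        return v >= flingThresholdPxPerMs ? Gesture.FLING : Gesture.DRAG;
    }
}
```

The application code never sees raw coordinates here; it only receives `DRAG` or `FLING`, which is the kind of abstraction an input event provides.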

In my case, the camera was feeding me a continuous stream of frames, each with a probability of whether the eyes were open or closed. Using a finite state machine, I was able to abstract these into three simple input events: EYE_OPEN, EYE_CLOSED and FACE_MISSING.
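As a rough sketch of the first step, each frame's probabilities can be reduced to one of the three readings with a simple threshold. The class name `EyeFrameClassifier`, the per-eye "open" probabilities in the range 0 to 1, the threshold, and the rule that both eyes must be open are all assumptions for illustration, not details taken from the project.

```java
/** Hypothetical sketch: reducing one camera frame to a single reading. */
final class EyeFrameClassifier {
    enum EyeReading { EYE_OPEN, EYE_CLOSED, FACE_MISSING }

    private final float openThreshold; // assumed: minimum probability to count an eye as open

    EyeFrameClassifier(float openThreshold) {
        this.openThreshold = openThreshold;
    }

    /**
     * @param faceFound     whether the detector found a face in this frame
     * @param leftOpenProb  assumed probability (0..1) that the left eye is open
     * @param rightOpenProb assumed probability (0..1) that the right eye is open
     */
    EyeReading classify(boolean faceFound, float leftOpenProb, float rightOpenProb) {
        if (!faceFound) {
            return EyeReading.FACE_MISSING;
        }
        // Assumption: both eyes must clear the threshold to count as "open".
        boolean open = leftOpenProb >= openThreshold && rightOpenProb >= openThreshold;
        return open ? EyeReading.EYE_OPEN : EyeReading.EYE_CLOSED;
    }
}
```

A per-frame reading like this is still noisy on its own; deciding when to actually notify the rest of the app is the state machine's job.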

Finite State Machine

My interaction technique involved handling 3 states: open eyes, closed eyes, and face missing. As with any UI component, a finite state machine makes the input easier to handle. Mine ended up looking like this:

I used this finite state machine to determine when to send out input events, that is, whenever there is a state transition.
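The idea of sending an event only on a state transition can be sketched as follows. This is a simplified stand-in rather than the actual machine from the project: the class `EyeStateMachine`, its `observe` method, and the choice of `FACE_MISSING` as the starting state are assumptions; only the three event names come from the description above.

```java
import java.util.function.Consumer;

/** Hypothetical sketch: a state machine that emits an event only when the state changes. */
final class EyeStateMachine {
    enum State { EYES_OPEN, EYES_CLOSED, FACE_MISSING }

    enum Event { EYE_OPEN, EYE_CLOSED, FACE_MISSING }

    private final Consumer<Event> listener;
    private State current = State.FACE_MISSING; // assumed starting state

    EyeStateMachine(Consumer<Event> listener) {
        this.listener = listener;
    }

    /** Feed in the state observed for the latest frame. */
    void observe(State observed) {
        if (observed == current) {
            return; // same state as before: no transition, so no event
        }
        current = observed;
        listener.accept(toEvent(observed));
    }

    State current() { return current; }

    private static Event toEvent(State state) {
        switch (state) {
            case EYES_OPEN:   return Event.EYE_OPEN;
            case EYES_CLOSED: return Event.EYE_CLOSED;
            default:          return Event.FACE_MISSING;
        }
    }
}
```

Feeding it open, open, closed, then missing produces exactly three events (`EYE_OPEN`, `EYE_CLOSED`, `FACE_MISSING`), no matter how many frames arrive in between, so listeners are not flooded with one event per frame.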

Initial Prototype and Nielsen’s Heuristics

The initial prototype I made had two circular rings that represented the volume and the awake countdown. I was hoping this setup would be intuitive enough for my testers, since I thought having the rings react to blinking was enough feedback for them to figure out what was happening. Apparently, it wasn't. They got the feedback, but they didn't know what it meant. So I had to think of a way to convey to the user that one ring represents the volume level and the other tracks whether or not their eyes are open.

Final Prototype and Future Improvements

The final prototype wasn't a huge departure from the original design. I incorporated the feedback from the initial prototype by adding icons that better indicate what the progress circles mean. I also added a help button as a fallback in case users are still unsure what to do.

Some of the future improvements I'm considering are:

Capturing frames and uploading to social media

Changing alarm tones

Repeating alarms

Setting alarms upon booting